Building Trust in Agentic Systems: The Human-in-the-Loop Framework

- Subhasish Karmakar

- Nov 26, 2025

“The real question is not ‘Can the agent act?’ It’s ‘Do we trust it to?’”

— A truth every design and product leader is waking up to.

The future of work isn’t about choosing between humans and AI — it’s about designing the handoff. As autonomous AI agents increasingly handle complex tasks without human intervention, from monitoring security threats to managing customer relationships, one question becomes paramount:

How do we build trust in systems that think and act on their own?

It’s not a philosophical puzzle — it’s a business risk.

According to recent research, organizations that fail to establish trust could see a 50% decline in model adoption, business goal attainment, and user acceptance by 2026. Yet today, only 7% of desk workers consider AI results trustworthy enough for job-related tasks.

Trust isn’t lagging behind the tech curve — it is the curve.

The Autonomy-Trust Paradox

Here’s the paradox: the more autonomous an agent becomes, the less transparent its operations are to users.

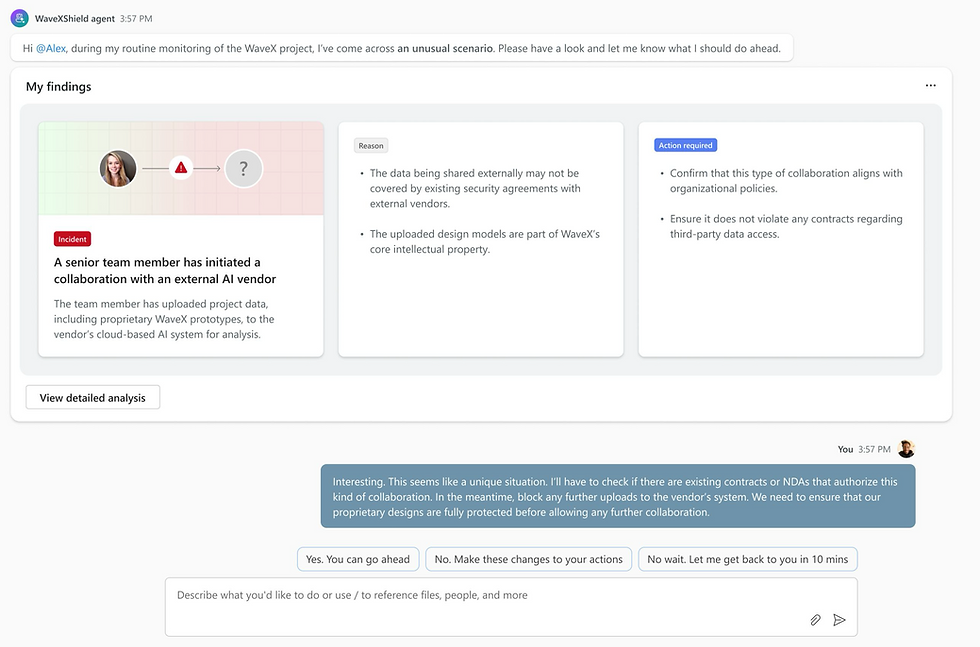

Traditional software required explicit user commands for every action. Copilots fill in the gaps with suggestions. But autonomous agents? They perceive, reason, plan, and execute — often while we’re not even watching.

This shift represents a fundamental change in interaction design. We’re moving from “User makes the decision” to “User mostly reviews and approves.” The interface itself becomes optional, and the experience shifts from active to passive.

But passive doesn’t mean powerless. The key is building what researchers call calibrated trust — trust that matches the actual trustworthiness of the system.

The Human-in-the-Loop Framework

Recent work in AI agent design points to a structured approach to building trust, organized around five critical phases:

1. Onboarding with Transparency

Trust begins before the agent takes its first action. When recruiting an agent, users need to understand:

What the agent can and cannot do

Its past performance metrics

The data sources it will access

Its decision-making approach

Reliability is critical to trustworthiness: AI systems must perform consistently across diverse scenarios and handle edge cases effectively. But competence alone isn’t enough. An agent that is competent but not perceived as warm (that is, as well-intentioned) may still not be trusted, because intention matters. The agent must demonstrate both capability and benign intent.
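
To make the onboarding disclosures concrete, here is a minimal Python sketch of an “agent card” that packages the four items above (what the agent can and cannot do, past performance, data sources, decision approach) for display before the agent’s first action. The `AgentCard` class, its fields, the `covers` scope check, and the example values are all hypothetical illustrations, not part of any specific framework.

```python
from dataclasses import dataclass


@dataclass
class AgentCard:
    """Hypothetical onboarding disclosure shown before an agent's first action."""
    name: str
    capabilities: list[str]             # what the agent can do
    limitations: list[str]              # what it explicitly cannot do
    past_performance: dict[str, float]  # metric name -> value (0..1) from prior evaluation
    data_sources: list[str]             # systems the agent will read from
    decision_approach: str              # plain-language description of how it decides

    def covers(self, task: str) -> bool:
        """A task is in scope only if it is a declared capability and not a declared limitation."""
        return task in self.capabilities and task not in self.limitations

    def summary(self) -> str:
        """Render the card as plain text for an onboarding screen."""
        metrics = ", ".join(f"{k}: {v:.0%}" for k, v in self.past_performance.items())
        return "\n".join([
            f"Agent: {self.name}",
            f"Can do: {', '.join(self.capabilities)}",
            f"Cannot do: {', '.join(self.limitations)}",
            f"Past performance: {metrics}",
            f"Data sources: {', '.join(self.data_sources)}",
            f"Decision approach: {self.decision_approach}",
        ])


# Example values are invented for illustration.
card = AgentCard(
    name="AlertTriageAgent",
    capabilities=["classify alerts", "draft tickets"],
    limitations=["close incidents"],
    past_performance={"agreement with analysts": 0.92},
    data_sources=["security alert feed", "ticket history"],
    decision_approach="Compares new alerts with how analysts handled similar past alerts.",
)
print(card.summary())
```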

2. Training and Simulation

Before going live, agents should learn from historical data — essentially apprenticing with human experts. One effective pattern involves:

Learning phase: The agent studies how humans previously handled similar scenarios

Simulation phase: The agent runs in a sandbox environment, making recommendations without taking action

Review phase: Humans evaluate the agent’s simulated performance

This approach addresses a fundamental insight from trust research: training and building familiarity are critical, and AI-supported simulations allow for a smoother transition between manual and AI-supported operations.
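
The simulation and review phases can be approximated with a “shadow mode” harness: replay historical cases through the agent, record its recommendations without executing anything, and measure how often it agrees with what human experts actually did. This is a minimal sketch under assumed names (`HistoricalCase`, `run_shadow_simulation`, and the rule-based `toy_agent` stand-in). It does not cover the learning phase itself, and exact-match agreement is only one possible review metric.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class HistoricalCase:
    case_id: str
    features: dict
    human_decision: str  # what the human expert actually did


def run_shadow_simulation(
    agent: Callable[[dict], str], cases: list[HistoricalCase]
) -> tuple[float, list[tuple[str, str, str]]]:
    """Replay past cases in a sandbox: the agent only recommends, nothing is executed.
    Returns the agreement rate with human experts and the disagreements for review."""
    disagreements = []
    agreed = 0
    for case in cases:
        recommendation = agent(case.features)  # recommendation only, no side effects
        if recommendation == case.human_decision:
            agreed += 1
        else:
            disagreements.append((case.case_id, recommendation, case.human_decision))
    rate = agreed / len(cases) if cases else 0.0
    return rate, disagreements


# Hypothetical rule-based agent, used only to exercise the harness.
def toy_agent(features: dict) -> str:
    return "escalate" if features.get("severity", 0) >= 7 else "ignore"


cases = [
    HistoricalCase("c1", {"severity": 9}, "escalate"),
    HistoricalCase("c2", {"severity": 2}, "ignore"),
    HistoricalCase("c3", {"severity": 6}, "escalate"),
    HistoricalCase("c4", {"severity": 8}, "escalate"),
]
rate, to_review = run_shadow_simulation(toy_agent, cases)
print(f"Agreement: {rate:.0%}")
for case_id, agent_said, human_did in to_review:
    print(f"Review {case_id}: agent said {agent_said!r}, human did {human_did!r}")
```

The disagreement list, rather than the headline rate, is what reviewers would typically examine: each entry is a case where the agent and the human expert diverged.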

3. Graduated Autonomy

Trust isn’t binary — it’s a slider. The framework introduces an Autonomy-Trust Slider where:

Low autonomy, high transparency: Agent shows all reasoning, requires approval for every action

High autonomy, lower visibility: Agent handles routine tasks independently, surfaces only exceptions

The greater the trust, the more comfortable humans feel granting the agent autonomy. But this progression must be earned, not assumed.

Research shows that 63% of workers believe human involvement will be key to building trust in AI. Some organizations are responding with what they call “Mindful Friction”: intentional pauses in AI processes at critical junctures to ensure human engagement.
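
One way to express the slider and Mindful Friction in code is a single approval gate: the slider position decides whether routine work can run unattended, while a fixed set of critical actions always pauses for a human. The two-level `Autonomy` enum, the `CRITICAL_ACTIONS` set, and `needs_human_approval` are hypothetical names chosen for this sketch; a real system would define its own levels and critical junctures.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical slider positions matching the two ends described above."""
    APPROVE_EVERY_ACTION = 0  # low autonomy, high transparency
    EXCEPTIONS_ONLY = 1       # high autonomy: routine tasks run alone, exceptions surface


# Assumed critical junctures where Mindful Friction always applies.
CRITICAL_ACTIONS = {"delete_records", "send_payment", "email_customer"}


def needs_human_approval(action: str, is_routine: bool, level: Autonomy) -> bool:
    """Return True if the agent must pause and wait for a human before acting."""
    if action in CRITICAL_ACTIONS:
        return True  # intentional pause, whatever the slider position
    if level == Autonomy.APPROVE_EVERY_ACTION:
        return True  # every action needs sign-off at the low-autonomy end
    return not is_routine  # at the high-autonomy end, only exceptions are surfaced


for action, routine in [("tag_ticket", True), ("merge_accounts", False), ("send_payment", True)]:
    for level in Autonomy:
        print(action, level.name, needs_human_approval(action, routine, level))
```

Checking the friction set before the slider is the design choice that matters here: raising autonomy never removes the pauses at critical junctures.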

4. Reasoning on Demand

Here’s a counterintuitive insight: showing users how an agent thinks is more important than showing what it does.

The framework separates:

Output (the visible result — a plan, a recommendation, an action)

Reasoning (the thought process that led to that output)

Users can access the agent’s reasoning on demand — understanding which data sources it consulted, what constraints it considered, what alternatives it rejected. This addresses the “black box” problem that has plagued AI adoption for years.

Transparency and explainability can help users develop an appropriate understanding of an AI system: explanations shed light on model behavior and help users calibrate their trust. However, transparency alone is insufficient for accountability, because explanations can still be highly technical and hard to parse.

The solution? Progressive disclosure: surface high-level reasoning by default, with the ability to drill down into technical details when needed.
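
The separation of output from reasoning, combined with progressive disclosure, might look like the following Python sketch: the agent returns its result and its reasoning as separate objects, and a rendering function reveals more detail as the user asks for it. The `Reasoning` and `AgentResponse` classes, the numeric `depth` levels, and the example content are assumptions for illustration. By default the sketch shows the output plus a one-line rationale, in line with surfacing high-level reasoning first.

```python
from dataclasses import dataclass, field


@dataclass
class Reasoning:
    """The thought process behind an output, stored separately from it."""
    summary: str  # one-line, plain-language rationale
    data_sources: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    rejected_alternatives: dict[str, str] = field(default_factory=dict)  # option -> why rejected
    technical_trace: str = ""  # low-level detail, shown only on request


@dataclass
class AgentResponse:
    output: str           # the visible result: a plan, recommendation, or action
    reasoning: Reasoning  # revealed on demand


def explain(response: AgentResponse, depth: int = 1) -> str:
    """Progressive disclosure. depth 0: output only; 1 (default): plus rationale;
    2: plus sources, constraints, and rejected alternatives; 3: plus technical trace."""
    r = response.reasoning
    lines = [response.output]
    if depth >= 1:
        lines.append(f"Why: {r.summary}")
    if depth >= 2:
        lines.append(f"Sources: {', '.join(r.data_sources)}")
        lines.append(f"Constraints: {', '.join(r.constraints)}")
        for option, reason in r.rejected_alternatives.items():
            lines.append(f"Rejected '{option}': {reason}")
    if depth >= 3 and r.technical_trace:
        lines.append(f"Trace: {r.technical_trace}")
    return "\n".join(lines)


resp = AgentResponse(
    output="Move Friday's deployment to Monday.",
    reasoning=Reasoning(
        summary="Two of the three on-call engineers are out on Friday.",
        data_sources=["on-call calendar", "deployment policy"],
        constraints=["at least two on-call engineers during a deployment"],
        rejected_alternatives={"deploy Friday": "breaks the staffing constraint"},
        technical_trace="calendar lookup: 1 of 3 on-call engineers available on Friday",
    ),
)
print(explain(resp))           # default view: output plus one-line rationale
print(explain(resp, depth=3))  # full drill-down
```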

5. Graceful Failure Handling

Perhaps the most trust-building moment isn’t when an agent succeeds — it’s when it admits it doesn’t know what to do.

When confronted with novel scenarios outside its training, a trustworthy agent should:

Recognize the situation is outside its experience

Pause any automated actions

Surface the issue to a human with context

Learn from the human’s decision for future encounters
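
The four behaviors above can be sketched as a small wrapper around an agent. Here “outside its experience” is approximated by two assumed signals: the scenario is missing from a playbook of previously handled cases, or the agent’s self-reported confidence falls below a threshold. The `CautiousAgent` class, the 0.8 threshold, and the lookup-table form of “learning” are hypothetical simplifications; a production system would need a real novelty or out-of-distribution detector and a proper feedback pipeline. “Pause” here means withholding the automated action for that case only.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Escalation:
    scenario: str
    context: dict
    confidence: float


@dataclass
class CautiousAgent:
    """Hypothetical wrapper that escalates instead of guessing on unfamiliar scenarios."""
    playbook: dict[str, str]           # scenario -> action previously handled or approved
    confidence_threshold: float = 0.8  # assumed cut-off for "I'm not sure"
    queue: list[Escalation] = field(default_factory=list)  # issues awaiting a human

    def handle(self, scenario: str, context: dict, confidence: float) -> Optional[str]:
        # 1. Recognize the situation is outside its experience.
        unfamiliar = scenario not in self.playbook or confidence < self.confidence_threshold
        if unfamiliar:
            # 2. Pause: take no automated action for this case.
            # 3. Surface the issue to a human, with context.
            self.queue.append(Escalation(scenario, context, confidence))
            return None
        return self.playbook[scenario]

    def resolve(self, scenario: str, human_action: str) -> None:
        # 4. Learn from the human's decision (here: simply remember it).
        self.playbook[scenario] = human_action
        self.queue = [e for e in self.queue if e.scenario != scenario]


agent = CautiousAgent(playbook={"password_reset": "send reset link"})
print(agent.handle("password_reset", {"user": "u1"}, confidence=0.95))    # known: acts
print(agent.handle("account_takeover", {"user": "u2"}, confidence=0.95))  # novel: escalates
agent.resolve("account_takeover", "lock account and call the user")
print(agent.handle("account_takeover", {"user": "u3"}, confidence=0.9))   # now handled
```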

This humility — this willingness to say “I need help” — may be the most human quality we can build into autonomous systems.

The Design Language of Trust

Beyond the framework, specific design patterns support trust:

Celebrating screen real estate: Chat becomes the protagonist. By maximizing conversational space and minimizing clutter, designers keep focus on the human-agent dialogue rather than overwhelming users with controls.

Structure and consistency: When everything else is unpredictable (the agent’s autonomous actions), the interface itself must be a model of reliability. Consistent layouts and visual elements create a sense of stability.

Context sensitivity: Trust grows when systems demonstrate awareness. The interface should adapt to show relevant options based on the user’s current situation and immediate needs.

The Path Forward

Recent research identifies competence as the key factor influencing trust in automation, and observed errors erode that trust. This makes the graduated autonomy approach, where agents prove themselves before gaining full independence, not just psychologically sound but operationally essential.
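
To illustrate how observed competence and errors could drive graduated autonomy, here is a minimal Python sketch that keeps a running trust estimate (a Laplace-smoothed success ratio, a technique chosen for this example rather than taken from the cited research) and only grants autonomy after enough evidence. The `TrustLedger` class, the threefold error weight, the 0.9 threshold, and the 20-run minimum are all assumptions chosen to show the shape of the idea.

```python
from dataclasses import dataclass


@dataclass
class TrustLedger:
    """Hypothetical running trust estimate built from observed outcomes."""
    successes: float = 0.0
    failures: float = 0.0
    error_weight: float = 3.0  # assumption: an observed error weighs as much as three successes

    def record(self, succeeded: bool) -> None:
        if succeeded:
            self.successes += 1
        else:
            self.failures += self.error_weight  # errors erode trust faster than successes build it

    @property
    def trust(self) -> float:
        # Laplace-smoothed success ratio: 0.5 before any evidence.
        return (self.successes + 1) / (self.successes + self.failures + 2)

    def autonomy_level(self, min_runs: int = 20, threshold: float = 0.9) -> str:
        runs = self.successes + self.failures / self.error_weight  # actual observed runs
        if runs < min_runs or self.trust < threshold:
            return "supervised"  # approve every action
        return "autonomous"      # routine tasks run independently, exceptions surface


ledger = TrustLedger()
for _ in range(30):
    ledger.record(True)
print(ledger.autonomy_level(), round(ledger.trust, 3))
ledger.record(False)
print(ledger.autonomy_level(), round(ledger.trust, 3))
```

With these assumed numbers, 30 clean runs earn autonomy (trust 31/32, about 0.97), and a single error drops the estimate to 31/35, about 0.89, returning the agent to supervision.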

Conclusion: Trust as a Feature, Not a Bug

The future of agentic AI isn’t about removing humans from the loop — it’s about redesigning the loop itself. It’s about creating systems that are:

Transparent enough to understand

Humble enough to ask for help

Adaptive enough to learn from corrections

Competent enough to handle routine tasks independently

As organizations bet their futures on autonomous AI, the critical factor that can stall transformation is trust. But trust isn’t a given — it’s designed, earned, and maintained through deliberate patterns that keep humans meaningfully in the loop.

The agents that succeed won’t be the fastest or most autonomous. They’ll be the most trustworthy. And trustworthiness, it turns out, is a design problem as much as a technical one.

This article draws from research in autonomous agent design, human-AI interaction, and enterprise AI implementation. For organizations looking to implement agentic systems, the human-in-the-loop framework offers a practical roadmap from onboarding through scaled autonomy — always with trust at the center.

References:

Jyoti, R. (2024). “Building trust in autonomous AI: A governance blueprint for the agentic era.” CIO Magazine.

“Establishing trust in artificial intelligence-driven autonomous healthcare systems” (2024). PMC.

“Autonomous AI Agents Are Coming: Why Trust and Training Hold the Keys to Their Success” (2025). Salesforce.

Li, Y., Wu, B., Huang, Y., & Luan, S. (2024). “Developing trustworthy artificial intelligence.” Frontiers in Psychology.

Kim, S. S. Y. (2024). “Establishing Appropriate Trust in AI through Transparency and Explainability.” CHI Conference.
